GDC 2016 has come and gone. Now that the dust has settled and my feet have recovered (I walked well over 25 miles during the week between classes, the expo and numerous meetings with partners), I want to share some insights into what I experienced and learned at the show.

First and foremost, there is no question that the future is bright for real-time model producers, especially on TurboSquid. It was gratifying to hear from everyone we spoke with that they use model content from TurboSquid in their work, whether in pre-production testing and R&D, as scaffolding for more style-specific assets, or as-is in the final game. Game developers are clearly seeing and using your work, and with our new real-time landing page, we expect that use to grow.

On a personal level, it was impressive to see how far the games industry has come since I last played games religiously (the Quake 3 Arena days, to date myself horribly). There were insanely realistic characters and environments that took advantage of the latest real-time shading technologies, as well as stunning models produced with sophisticated photogrammetry techniques that could be used in an almost infinite number of ways. And all of this content is being produced at a time when the major game engines, including Unity3D, Unreal Engine, CryEngine and Amazon’s Lumberyard (based on CryEngine), are all free to download and use. There is almost no excuse for a 3D artist not to invest in learning one or more of these tools.

Convergence of Content Standards

Real-time content is also converging around standards that can’t be ignored and should be on everyone’s radar. Moreover, typical game development cycles and processes are changing, not only to accommodate new real-time experiences (like AR/VR, which we’ll cover in a separate post), but also to reduce thrash during model creation for these projects. With production costs a major concern, finding efficiencies in development has become more important than ever.

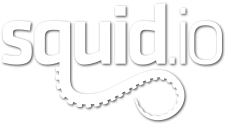

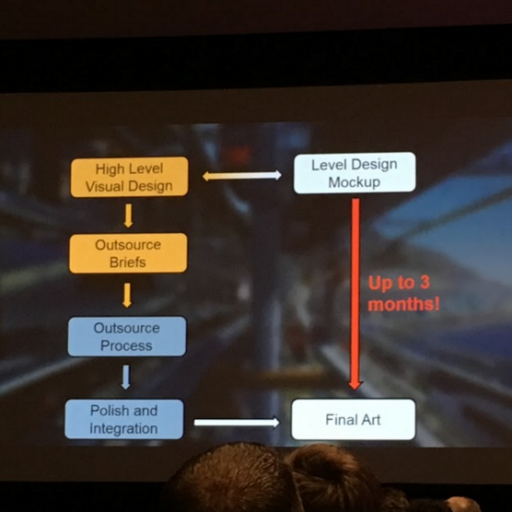

In class after class, we learned that major game developers like Guerrilla Games, DICE and Volition all outsourced substantial chunks of their 3D model creation pipelines to third parties. And not just a couple of hundred assets either: anywhere from 3,000 to as many as 14,000 art assets were produced out of house. Why? A major reason was a reluctance to hire and then let go of artists during production, since 3D artists were only needed for a portion of the overall development cycle. These companies found it more efficient to create comprehensive outsourcing design briefs and work with facilities that could execute and deliver value. And while the content took longer to create (upwards of 6 months from style guides to integration), the companies felt they could manage the process far more tightly than if all of the art assets had been built internally.

As the Guerrilla Games presenter said, “We want to focus on making great games, not art assets.”

PBR, I repeat, PBR

Additionally, the letters PBR came up repeatedly as the go-to standard for material creation for real-time model content.¹ Physically Based Rendering lets 3D modelers create materials that behave consistently and follow real-world shading models, regardless of the environment they are placed in. A common problem in a traditional pipeline was that two different artists might create brick materials for different parts of a single environment, and when both materials were put under the same lighting, one or both looked terrible, costing a lot of time in rework. PBR workflows, by contrast, allow materials to be “calibrated” to their real-world counterparts (is the material a dielectric or a metal?), measured and built to stay consistent across lighting conditions and platforms, from indie games all the way up to high-end film projects.
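To make the dielectric-versus-metal distinction concrete, here is a minimal Python sketch of how a metallic/roughness-style workflow might derive a material’s specular reflectance at normal incidence (F0). It assumes the widely used convention that non-metals reflect roughly 4% of light head-on (F0 ≈ 0.04) while metals take their F0 from the base color, and it applies Schlick’s Fresnel approximation. The `Material` class and helper names are hypothetical, not taken from any particular engine or tool.

```python
from dataclasses import dataclass
from typing import Tuple

RGB = Tuple[float, float, float]  # linear RGB values in [0, 1]

# Assumed convention: non-metals reflect about 4% of light at normal incidence.
DIELECTRIC_F0 = 0.04


@dataclass
class Material:
    name: str          # hypothetical material record, for illustration only
    base_color: RGB    # albedo for non-metals, reflectance color for metals
    metallic: float    # 0.0 = dielectric, 1.0 = metal


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b."""
    return a + (b - a) * t


def f0(mat: Material) -> RGB:
    """Blend from the fixed dielectric F0 toward the base color as metallic rises."""
    r, g, b = (lerp(DIELECTRIC_F0, c, mat.metallic) for c in mat.base_color)
    return (r, g, b)


def schlick_fresnel(f0_rgb: RGB, cos_theta: float) -> RGB:
    """Schlick's approximation: F = F0 + (1 - F0) * (1 - cos_theta)^5."""
    k = (1.0 - cos_theta) ** 5
    r, g, b = (f + (1.0 - f) * k for f in f0_rgb)
    return (r, g, b)


# Two artists' bricks: different base colors, but both are dielectrics.
brick_a = Material("brick_a", (0.45, 0.20, 0.12), metallic=0.0)
brick_b = Material("brick_b", (0.30, 0.15, 0.10), metallic=0.0)
print(f0(brick_a) == f0(brick_b))  # True: both get F0 = 0.04 per channel
```

Because both bricks are tagged as dielectrics, their specular response comes from the same physical constant rather than from each artist’s taste, which is one reason calibrated materials hold up when placed under shared lighting.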

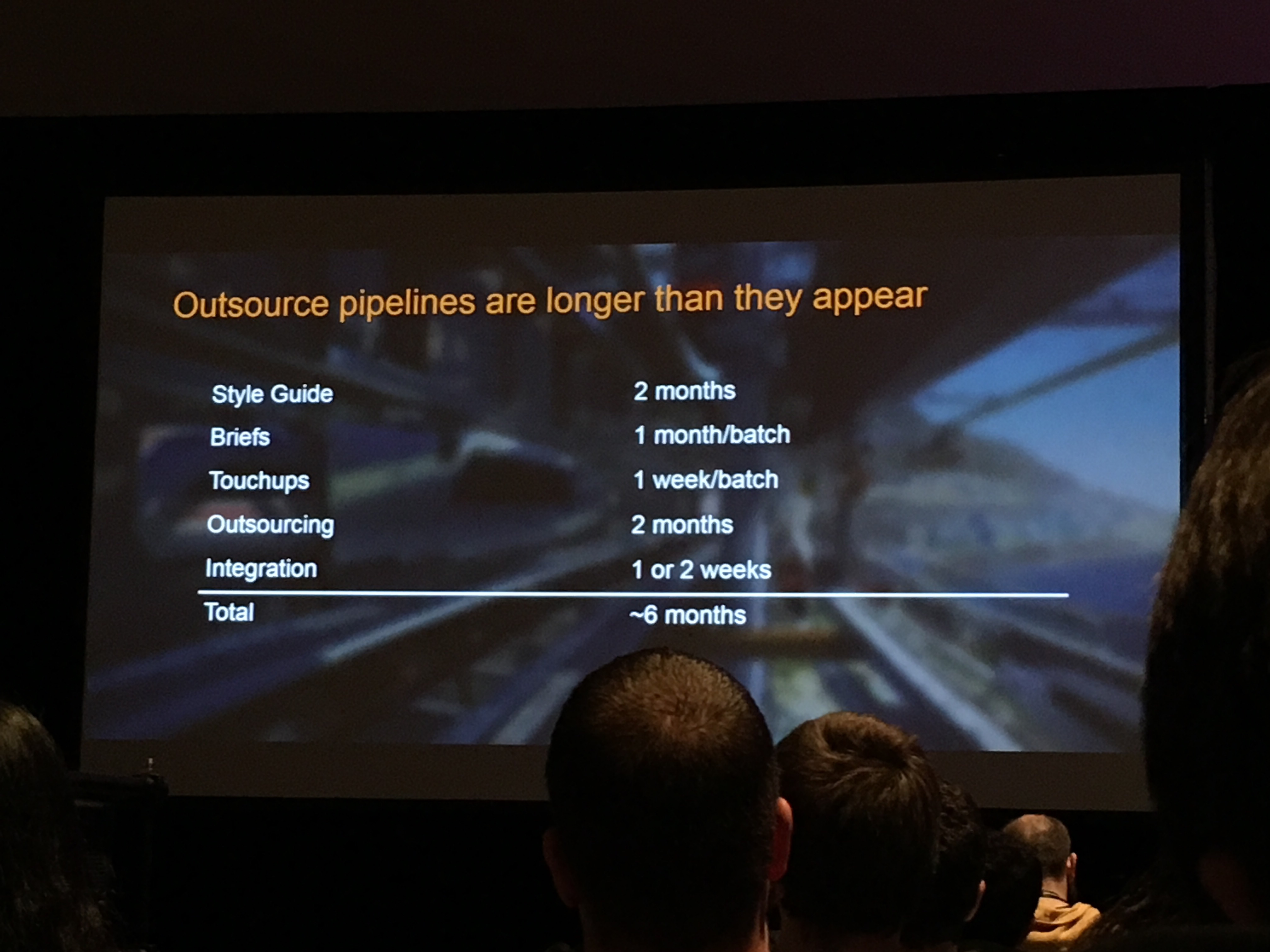

We attended a number of classes, from Naughty Dog’s PBR texturing pipeline for Uncharted 4 to Allegorithmic and NVIDIA’s compelling class on PBR and MDL (NVIDIA’s Material Definition Language) and why PBR should matter to all artists. We heard it again and again: PBR materials give artists flexibility in creation, consistency through validation tools and portability from platform to platform. Build it once, then reuse it again and again.
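The “consistency through validation tools” point can be illustrated with a small checker. The Python sketch below flags base colors whose brightness falls outside an assumed plausible range. The thresholds (30 to 240 in 8-bit sRGB for non-metals, 180 and up for metals) and the use of a simple channel mean as a brightness proxy are illustrative assumptions, not the defaults of Substance, Marmoset Toolbag or any engine, and `validate_base_color` is a hypothetical helper.

```python
from typing import List, Tuple

SRGB = Tuple[int, int, int]  # 8-bit sRGB base color

# Illustrative thresholds only (assumptions, not any tool's defaults).
NONMETAL_RANGE = (30, 240)
METAL_RANGE = (180, 255)


def validate_base_color(name: str, srgb: SRGB, is_metal: bool) -> List[str]:
    """Return warnings if a base color's brightness is outside the assumed range.

    Brightness is approximated by the mean of the three channels; a real
    validator would likely use a proper luminance calculation instead.
    """
    lo, hi = METAL_RANGE if is_metal else NONMETAL_RANGE
    brightness = sum(srgb) / 3
    if lo <= brightness <= hi:
        return []
    kind = "metal" if is_metal else "non-metal"
    return [f"{name}: {kind} base color brightness {brightness:.0f} outside [{lo}, {hi}]"]


# Two artists' brick materials checked against the same rule.
for name, color in [("brick_a", (120, 60, 45)), ("brick_b", (20, 10, 8))]:
    for warning in validate_base_color(name, color, is_metal=False):
        print(warning)
```

Running the same check over every artist’s output catches the too-dark brick before it ever reaches a lit scene, rather than after both materials have been placed side by side.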

What’s more, we learned that many PBR elements already exist in your current toolset, from Iray to V-Ray to RenderMan, and adoption is growing. PBR is already built into Allegorithmic’s tools (Substance Designer 5 and Substance Painter 2) as well as real-time viewers like Marmoset Toolbag 2. In meetings with both of these companies during the show, we agreed that adopting this sort of standardized workflow can make artists’ lives easier. Now we need to take the next steps to make that a reality.

So, What Do We Do Now?

So what does this mean for TurboSquid artists? Essentially, if you’re interested in building models for real-time use, take full advantage of the fact that all of the major game engines are free to download and learn. Do it. That may seem daunting, but you won’t have to learn completely alien workflows to build real-time content, and your existing tools and rendering tech will still apply.

Internally, we’re already looking at a number of ways to provide even more value to the games industry based on the feedback we received during the show, and we will be posting more about those initiatives later this year. In the meantime, if you have questions about which game engines to learn or where to start, don’t forget that we have a shiny new forum where you can post your questions and share feedback on your experiences.

As a final aside, for all TurboSquid artists unfamiliar with PBR, we highly recommend Allegorithmic’s two-part guide on the subject. And if you’re already familiar with PBR workflows but haven’t seen these documents, they’re a great way to expand your knowledge even further. 🙂

Part 1: The Theory of Physically Based Rendering

Part 2: Practical Guidelines for PBR Texturing

1 – To be fair, while PBR materials and workflows are now a substantial part of game development, we also learned that the mobile games industry doesn’t use PBR nearly as much (due to limited processing power at present, though we have no doubt that won’t be the case for long), and that PBR’s use in VR is still a work in progress.